A new artificial intelligence created by researchers at the Massachusetts Institute of Technology pulls off a staggering feat: by analyzing only a short audio clip of a person's voice, it reconstructs what they might look like in real life.

The AI's results aren't perfect, but they're pretty good - a remarkable and somewhat terrifying example of how a sophisticated AI can make incredible inferences from tiny snippets of data.

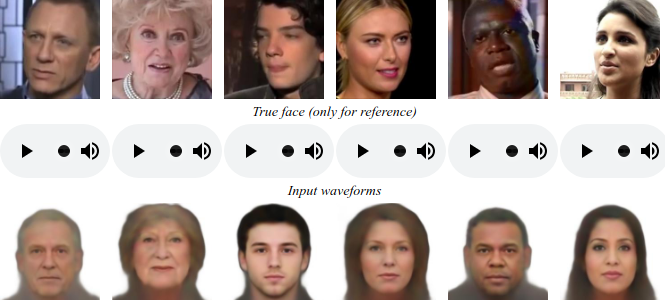

In a paper published this week to the preprint server arXiv, the team describes how it used a deep network architecture, trained by videos from YouTube and elsewhere online, to analyze short voice clips and reconstruct what the speaker might look like.

In practice, the Speech2Face algorithm seems to have an uncanny knack for spitting out rough likenesses of people based on nothing but their speaking voices.

(Speech2Face)

(Speech2Face)

The MIT research isn't the first to recreate a speaker's physical characteristics based on voice recordings.

Researchers at Carnegie Mellon University recently published a paper on a similar algorithm, which they presented at the World Economic Forum last year.

The MIT researchers urge caution on the project's GitHub page, acknowledging that the tech raises worrisome questions about privacy and discrimination.

"Although this is a purely academic investigation, we feel that it is important to explicitly discuss in the paper a set of ethical considerations due to the potential sensitivity of facial information," they wrote, suggesting that "any further investigation or practical use of this technology will be carefully tested to ensure that the training data is representative of the intended user population."

This article was originally published by Futurism. Read the original article.

Editor's note (via Futurism): This story mistakenly identified Speech2Voice as a Carnegie Mellon University project, not an MIT one. It has also been updated with technical details about the MIT project and background about previous work at Carnegie Mellon.