Unlike computers, which come pre-loaded with software containing millions of lines of syntax-based programming code, we humans aren't born with any language built into our brains. So how do we learn to communicate in the real world using human languages?

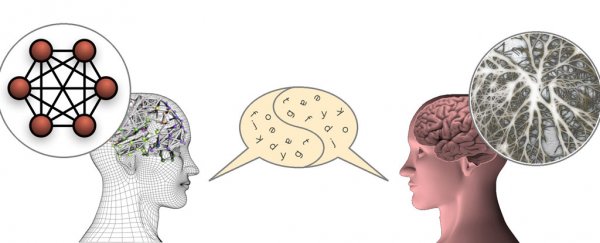

To better understand this process, a team of scientists from Italy and the UK has developed a network of artificial neurons designed to replicate what humans do automatically. The new cognitive system is made up of some 2 million interconnected artificial neurons. That is far fewer than the hundred billion or so neurons in a human brain, but it's enough for the artificial network to learn how to communicate.

And communicate it has, effectively teaching itself how to converse in human language by engaging in dialogue with a human interlocutor, as the researchers describe in PLOS ONE.

The system has been codenamed ANNABELL, which stands for Artificial Neural Network with Adaptive Behaviour Exploited for Language Learning.

"The results of this work show that the ANNABELL model is able to learn, starting from a tabula rasa [blank slate] condition, how to execute and to coordinate different cognitive tasks, as processing verbal information, storing and retrieving it from long-term memory, directing attention to relevant items and organising language production," the researchers write. "The proposed model can help to understand the development of such abilities in the human brain, and the role of reward processes in this development."

ANNABELL is designed to test the theoretical premise that the human brain develops higher cognitive skills from scratch, simply by interacting with the environment. According to the researchers, the network's success in processing language confirms this: they gradually fed it more and more questions (about 1,500 input sentences in total), and it incrementally responded with about 500 answers (output sentences) of its own.

To keep things simple, the language the researchers introduced to ANNABELL was early childhood English, pitched at about the level of a four-year-old child. Over a number of sessions where a human interlocutor communicated with the network through a text-based interface, ANNABELL progressively learned nouns, verbs, adjectives, pronouns and other kinds of words, and was able to structure its own responses with them.

In one example of these conversations, the researchers feed the network a set of information about activities at certain locations, such as school, home and play centres, and then ask it to respond to a series of questions about what occurred.

But how does ANNABELL learn the language so that it can meaningfully engage in these kinds of conversations? According to the researchers, it's through the system's recreation of two key mechanisms that are also present in our own brains: synaptic plasticity, where the connection between two neurons becomes more efficient when both are often active at the same time, and neural gating, where neurons act as switches to either block or transmit signals from one part of the brain to another.

In short, ANNABELL uses synaptic plasticity to learn how to control the signals that open and close neural gates, which in turn control the flow of information in the system's artificial brain.
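To make the interplay between those two mechanisms a little more concrete, here is a toy Python sketch. It is not ANNABELL's actual code: the function names, the learning rate, the threshold and the use of a "teaching signal" in place of real post-synaptic activity are all assumptions made purely for illustration. It shows a single connection being strengthened whenever a cue and the desired gate state are active together, and a gate that only lets a signal through once that learned connection is strong enough.

```python
def hebbian_update(weight, pre, post, rate=0.1):
    """Strengthen a connection when both sides are active together.

    `rate` is a hypothetical learning rate chosen for this sketch.
    """
    return weight + rate * pre * post


def gate(signal, control, threshold=0.5):
    """Act as a switch: pass the signal only if control exceeds threshold."""
    return signal if control > threshold else 0.0


def train_gate(episodes, rate=0.1):
    """Learn the weight that drives the gate's control signal.

    Each episode is a (cue, should_open) pair. Treating `should_open`
    as the post-synaptic activity is a simplifying assumption.
    """
    weight = 0.0
    for cue, should_open in episodes:
        weight = hebbian_update(weight, cue, should_open, rate)
    return weight


if __name__ == "__main__":
    # Before any learning the control signal is zero, so the gate is closed.
    print(gate(0.8, 0.0))  # prints 0.0

    # Six episodes in which the cue and the "open" state coincide.
    weight = train_gate([(1.0, 1.0)] * 6)

    # The learned weight now turns the same cue into an open gate.
    print(gate(0.8, weight * 1.0))  # prints 0.8
```

In this toy version, learning never touches the signal itself. It only changes whether the switch lets the signal through, which is the division of labour the researchers describe.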

But even though the researchers have already succeeded in teaching a computer to communicate at roughly the level of a four-year-old child, they have no plans to stop there.

"The next step will be to incorporate the model in a robot to extend it and test it further by studying the interaction between robots and humans," said Bruno Golosio, one of the researchers from the University of Sassari in Italy.