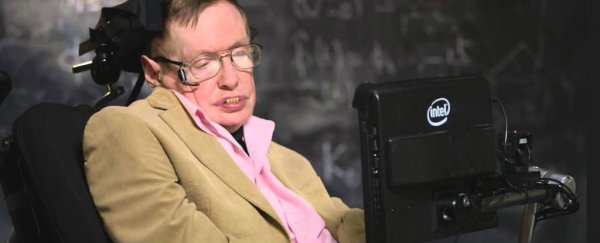

Physicist Stephen Hawking has warned humanity that we probably only have about 1,000 years left on Earth, and the only thing that could save us from certain extinction is setting up colonies elsewhere in the Solar System.

"[W]e must … continue to go into space for the future of humanity," Hawking said in a lecture at the University of Cambridge this week. "I don't think we will survive another 1,000 years without escaping beyond our fragile planet."

The fate of humanity appears to have been weighing heavily on Hawking of late - he's also recently cautioned that artificial intelligence (AI) will be "either the best, or the worst, thing ever to happen to humanity".

Given that humans are prone to making the same mistakes over and over again - even though we're obsessed with our own history and should know better - Hawking suspects that "powerful autonomous weapons" could have serious consequences for humanity.

As Heather Saul from The Independent reports, Hawking has estimated that self-sustaining human colonies on Mars are not going to be a viable option for another 100 years or so, which means we need to be "very careful" in the coming decades.

Without even taking into account the potentially devastating effects of climate change, global pandemics brought on by antibiotic resistance, and nuclear capabilities of warring nations, we could soon be sparring with the kinds of enemies we're not even close to knowing how to deal with.

Late last year, Hawking added his name to a coalition of more than 20,000 researchers and experts, including Elon Musk, Steve Wozniak, and Noam Chomsky, calling for a ban on anyone developing autonomous weapons that can fire on targets without human intervention.

As the founders of OpenAI, Musk's new research initiative dedicated to the ethics of artificial intelligence, said last year, our robots are perfectly submissive now, but what happens when we remove one too many restrictions?

What happens when you make them so perfect, they're just like humans, but better, just like we've always wanted?

"AI systems today have impressive but narrow capabilities," the founders said.

"It seems that we'll keep whittling away at their constraints, and in the extreme case they will reach human performance on virtually every intellectual task. It's hard to fathom how much human-level AI could benefit society, and it's equally hard to imagine how much it could damage society if built or used incorrectly."

And that's not even the half of it.

Imagine we're dealing with unruly robots that are so much smarter and so much stronger than us, and suddenly, we get the announcement - aliens have picked up on the signals we've been blasting out into the Universe and made contact.

Great news, right? Well, think about it for a minute. In the coming decades, Earth and humanity aren't going to look so crash-hot.

We'll be struggling to mitigate the effects of climate change, which means we'll be running out of land to grow crops, our coasts will be disappearing, and anything edible in the sea will probably be cooked by the rapidly rising temperatures.

If the aliens are aggressive, they'll see a weakened enemy with a habitable planet that's ripe for the taking. And even if they're non-aggressive, we humans certainly are, so we'll probably try to get a share of what they've got, and oops: alien wars.

As Hawking says in his new online film, Stephen Hawking's Favourite Places, "I am more convinced than ever that we are not alone," but if aliens do find us, "they will be vastly more powerful and may not see us as any more valuable than we see bacteria".

Clearly, we need a back-up plan, which is why Hawking's 1,000-year deadline to destruction comes with a caveat - we might be able to survive our mistakes if we have somewhere else in the Solar System to jettison ourselves to.

That all might sound pretty dire, but Hawking says we still have a whole lot to feel optimistic about, describing 2016 as a "glorious time to be alive and doing research into theoretical physics".

While John Oliver might disagree that there's anything good about 2016 at all, Hawking's advice is simple: "Remember to look up at the stars and not down at your feet."

"Try to make sense of what you see, wonder about what makes the Universe exist. Be curious," he told students at the Cambridge lecture. "However difficult life may seem, there is always something you can do and succeed at. It matters that you don't just give up."