For better or worse, Pokémon Go changed our lives, and now it's hard to imagine life without those plucky virtual creatures sneaking up on you when you're trying to pee.

But get ready for it, because researchers from MIT have kicked the augmented reality game up a notch by inventing a program that allows virtual objects like Pokémon to interact with real-world environments.

Yep, this means one day you could be snaring a Ponyta as it prances through a field (you monster) or wasting 20 Pokéballs on a Zubat that keeps messing up your curtains.

The technology, dubbed Interactive Dynamic Video, not only allows animated characters to move about the world, it also allows them to realistically affect objects in the environment, like Pikachu rustling the leaves of a bush in the footage below.

"This technique lets us capture the physical behaviour of objects, which gives us a way to play with them in virtual space," Abe Davis from MIT's Computer Science and Artificial Intelligence Laboratory told MIT News. "By making videos interactive, we can predict how objects will respond to unknown forces and explore new ways to engage with videos."

So how does it work?

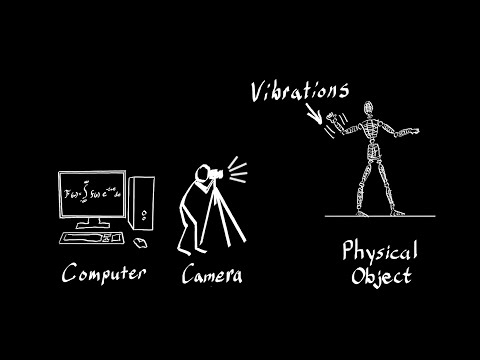

The technology uses traditional cameras to analyse the tiny, barely visible vibrations of objects in the frame. From these, algorithms pick out 'vibration modes' at different frequencies, each of which represents a distinct way that an object can move.

Based on data captured from just 5 seconds of footage, the program can build realistic simulations of an object's motions on the screen in front of you.
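To get a feel for what "finding vibration modes at different frequencies" might look like, here is a minimal Python sketch. It is not the researchers' algorithm: it assumes we already have the displacement of a single tracked point over 5 seconds of 30-frames-per-second footage (faked here with two sine waves), and it uses a plain discrete Fourier transform to find the frequencies at which that point wobbles most. The function name `dominant_frequencies`, the frame rate, and the 2 Hz and 5 Hz test signal are all illustrative assumptions.

```python
import cmath
import math


def dominant_frequencies(samples, fps, count=2):
    """Return the `count` strongest frequencies (in Hz) in a motion signal."""
    n = len(samples)
    mean = sum(samples) / n
    centred = [s - mean for s in samples]  # remove the resting position

    # Power at each frequency bin, via a direct discrete Fourier transform.
    power = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centred))
        power.append(abs(coeff) ** 2)

    # Keep only local peaks in the spectrum, strongest first.
    peaks = [k for k in range(1, n // 2)
             if power[k] > power[k - 1] and power[k] >= power[k + 1]]
    peaks.sort(key=lambda k: power[k], reverse=True)
    return [k * fps / n for k in peaks[:count]]


# Hypothetical input: 5 seconds of footage at 30 fps, with the tracked point
# swaying strongly at 2 Hz and more weakly at 5 Hz.
FPS = 30
DURATION_S = 5
signal = [math.sin(2 * math.pi * 2.0 * i / FPS)
          + 0.4 * math.sin(2 * math.pi * 5.0 * i / FPS)
          for i in range(FPS * DURATION_S)]

print(dominant_frequencies(signal, FPS))
```

A real system would have to measure motion across the whole image rather than at one point, and recover the shape of each mode as well as its frequency, so treat this as a toy version of the idea only.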

"If you want to model how an object behaves and responds to different forces, we show that you can observe the object respond to existing forces and assume that it will respond in a consistent way to new ones," says Davis, adding that they've even made it work on some existing YouTube videos.

Not only does the technique allow programmers to drop Pokémon or other animated characters into an environment and have them realistically interact with it, it also works in reverse, allowing users to interact with a virtual world, and poke and prod at objects 'inside' video footage.

Think plucking at the strings of a guitar in a video, or telekinetically controlling the leaves of a bush.
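The "pluck" can be sketched in code, too. The toy Python example below treats one vibration mode as a damped spring and computes how it would move in response to a brief virtual poke. It also checks the assumption Davis describes, that an object responds consistently to new forces: in this simple linear model, poking twice as hard gives exactly twice the motion. The function name `mode_response`, the 2 Hz frequency, the damping ratio and the unit mass are all assumptions made for illustration, not values from the research.

```python
import math


def mode_response(force, dt, freq_hz, damping_ratio):
    """Displacement over time of one damped vibration mode (unit mass)
    driven by a sampled force signal."""
    omega = 2 * math.pi * freq_hz
    omega_d = omega * math.sqrt(1 - damping_ratio ** 2)  # damped frequency

    # Impulse response of an underdamped oscillator: a decaying sine wave.
    impulse = [math.exp(-damping_ratio * omega * i * dt)
               * math.sin(omega_d * i * dt) / omega_d
               for i in range(len(force))]

    # Convolve the force with the impulse response to get the motion.
    return [dt * sum(force[k] * impulse[n - k] for k in range(n + 1))
            for n in range(len(force))]


# Hypothetical poke: a push lasting three frames, then nothing for the rest
# of a 5-second clip at 30 frames per second.
DT = 1 / 30
poke = [1.0 if i < 3 else 0.0 for i in range(150)]

gentle = mode_response(poke, DT, freq_hz=2.0, damping_ratio=0.05)
firm = mode_response([2 * f for f in poke], DT, freq_hz=2.0, damping_ratio=0.05)

# In this linear model, doubling the force doubles the response.
print(all(abs(b - 2 * a) < 1e-9 for a, b in zip(gentle, firm)))
```

A full simulation would add up the responses of many modes, each with its own frequency, damping and shape across the image.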

"When you look at VR companies like Oculus, they are often simulating virtual objects in real spaces," says Davis. "This sort of work turns that on its head, allowing us to see how far we can go in terms of capturing and manipulating real objects in virtual space."

Their research has been published in ACM Transactions on Graphics, and you can check out more of the technology's capabilities below. We are so ready to have this in our lives.