Google has announced another big push into artificial intelligence, unveiling a new approach to machine learning where neural networks are used to build better neural networks - essentially teaching AI to teach itself.

These artificial neural networks are designed to mimic the way the brain learns, and Google says its new technology, called AutoML, can develop networks that are more powerful, efficient, and easy to use.

Google CEO Sundar Pichai showed off AutoML on stage at Google I/O 2017 this week - the annual developer conference that Google throws for app coders and hardware makers to reveal where its products are heading next.

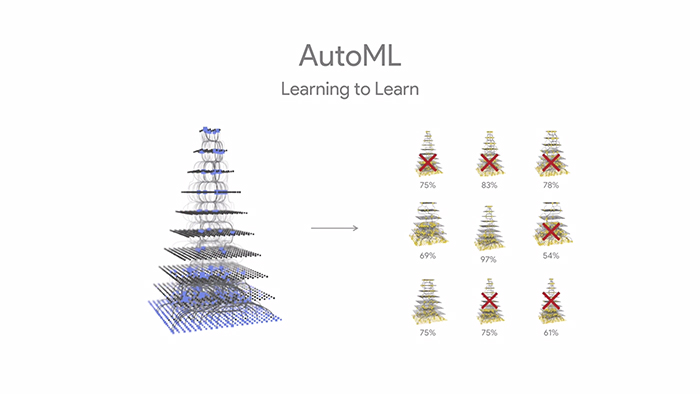

"The way it works is we take a set of candidate neural nets, think of these as little baby neural nets, and we actually use a neural net to iterate through them until we arrive at the best neural net," explains Pichai.

The technique behind this is called reinforcement learning, in which a computer learns by trial and error, with each attempt scored by some kind of reward, much like teaching a dog new tricks.
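To make the trial-and-reward idea concrete, here is a minimal sketch in Python. It is not Google's AutoML code: the candidate list, the `toy_reward` function and the table of preferences are all hypothetical stand-ins. In particular, the "controller" here is a simple list of preference scores rather than a neural net, and the reward is a made-up formula rather than the measured accuracy of a trained network.

```python
import math
import random

# Hypothetical search space: each candidate "baby" network is described
# only by the width of its hidden layer.
CANDIDATE_WIDTHS = [8, 16, 32, 64, 128]


def toy_reward(width):
    """Stand-in for 'train the candidate and measure its accuracy'.

    This made-up score peaks at width 32. A real system would train
    and evaluate an actual network here, which is the expensive part.
    """
    return 1.0 - abs(math.log2(width) - 5) / 4.0


def softmax(prefs):
    """Turn preference scores into probabilities that sum to 1."""
    highest = max(prefs)
    exps = [math.exp(p - highest) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def search(steps=2000, lr=0.1, seed=0):
    """Trial and error with a reward: sample, score, nudge preferences."""
    rng = random.Random(seed)
    prefs = [0.0] * len(CANDIDATE_WIDTHS)  # the toy "controller"
    baseline = 0.0  # running average of the rewards seen so far
    for _ in range(steps):
        probs = softmax(prefs)
        choice = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = toy_reward(CANDIDATE_WIDTHS[choice])
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        # Policy-gradient (REINFORCE) step for a softmax policy: raise the
        # chosen candidate's preference if it beat the running average,
        # lower it otherwise.
        for i in range(len(prefs)):
            grad = (1.0 if i == choice else 0.0) - probs[i]
            prefs[i] += lr * advantage * grad
    best = max(range(len(prefs)), key=prefs.__getitem__)
    return CANDIDATE_WIDTHS[best], softmax(prefs)


if __name__ == "__main__":
    best_width, probabilities = search()
    print("Preferred width:", best_width)
    print("Selection probabilities:", probabilities)
```

Over many rounds the loop shifts its preferences towards whichever candidate keeps earning the highest reward. The sketch also hints at why the real thing is so computationally hungry: every call to the reward function would mean training a whole network from scratch.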

It takes a massive amount of computational power to do, but Google's hardware is now getting to the stage where one neural net can analyse another.

Neural nets usually take an expert team of scientists and engineers a significant amount of time to put together, but thanks to AutoML, almost anyone will be able to build AI systems to tackle whatever tasks they like.

"We hope AutoML will take an ability that a few PhDs have today and will make it possible in three to five years for hundreds of thousands of developers to design new neural nets for their particular needs," Pichai writes in a blog post.

One neural net selects others. Credit: Google

Machine learning - getting computers to make their own decisions based on sample data - is one approach to developing artificial intelligence, and involves two major steps: training and inference.

Training is exactly what it sounds like: it might involve a computer looking at thousands of pictures of cats and dogs to learn which pixel patterns correspond to each animal. Inference is where the system then applies what it has learned to make educated guesses of its own.
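The two steps can be illustrated with a toy Python example. Everything in it is an assumption made for illustration: the two features (ear pointiness and snout length), the sample values, and the choice of a simple nearest-average classifier. Real image systems learn from raw pixels with far larger models.

```python
from statistics import mean

# Made-up labelled examples: (ear_pointiness, snout_length) -> animal.
TRAINING_DATA = [
    ((0.90, 0.20), "cat"),
    ((0.80, 0.30), "cat"),
    ((0.85, 0.25), "cat"),
    ((0.30, 0.80), "dog"),
    ((0.40, 0.90), "dog"),
    ((0.20, 0.70), "dog"),
]


def train(samples):
    """Training: summarise the labelled examples as one average per label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def infer(model, features):
    """Inference: guess the label whose average is closest to the input."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(model, key=lambda label: squared_distance(model[label]))


if __name__ == "__main__":
    model = train(TRAINING_DATA)
    print(infer(model, (0.88, 0.22)))
    print(infer(model, (0.25, 0.85)))
```

Training happens once, up front, and produces the `model`. Inference then reuses that model on examples it has never seen before.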

Replace cats and dogs with neural nets, and you have some idea of how AutoML works, but instead of recognising animals, it's recognising which candidate networks perform best.

Based on the results Google has seen, AutoML might be even smarter at recognising the best approaches to solving a problem than the human experts. That potentially takes a huge amount of work out of the process of building the AI systems of the future, because they can be partly self-built.

AutoML is still in its early stages, Google says, but AI, machine learning, and deep learning (the advanced machine learning technique designed to mimic the brain's neurons) are all finding their way into the apps we use every day.

In demos on stage at I/O, Google showed off how its machine learning technology could brighten up a dark picture or remove obstructions in images, all based on the training it has gotten from millions of other sample snaps.

Google says its computers are now even better at recognising what's in a photo than humans are. An app coming soon called Google Lens will be able to identify flowers for you, or businesses on a street, through your phone's camera.

These super-powered, deep learning algorithms are also finding their way into the field of health, where image processing systems can recognise the signs of cancer with even greater accuracy than the professionals.

With the help of AutoML, our AI platforms should get more intelligent more quickly, though it might be a while before you see the benefits in your Android camera app. Before then, app developers and scientists will be able to tap into AutoML.

As Google research scientists Quoc Le and Barret Zoph explain: "We think this can inspire new types of neural nets, and make it possible for non-experts to create neural nets tailored to their particular needs, allowing machine learning to have a greater impact to everyone."