AI image generators have improved remarkably quickly, and can now create faces that people judge to be more realistic than photos of real people.

However, a new study points to a way to improve our ability to detect AI-generated faces.

Researchers from the UK tested the face-assessing capabilities of 664 volunteers: super-recognizers (who have shown a high level of skill for comparing and recognizing real faces in previous studies) and people with typical face-recognition abilities.

Both groups found AI faces hard to detect, though the super-recognizers did better, as expected.

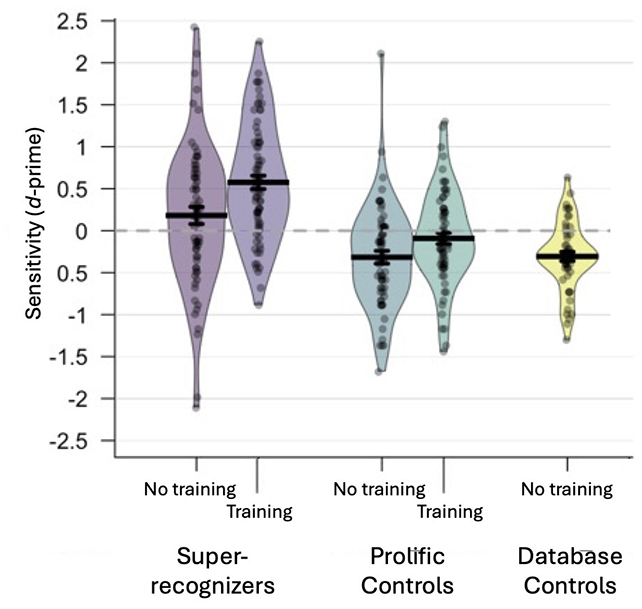

Significantly, super-recognizer participants who went through a brief 5-minute training session before being tested were better at distinguishing real faces from AI-generated ones.

"AI images are increasingly easy to make and difficult to detect," says psychology researcher Eilidh Noyes, from the University of Leeds.

"They can be used for nefarious purposes, therefore it is crucial from a security standpoint that we are testing methods to detect artificial images."

The study involved two different tasks, both with and without training. In the first, volunteers were shown a single face and asked to decide if it was AI; in the second, they were shown a real face and an AI face, and asked to spot the fake.

A different group of people participated in each experiment.

In the group that didn't receive any training, super-recognizers correctly identified AI faces 41 percent of the time, while those with typical face-recognition abilities recognized AI faces just 31 percent of the time.

Since exactly half the images were AI-generated, random guessing would be right 50 percent of the time. Both untrained groups scored below that, providing further evidence that AI portraits can look more real than life to our eyes.

In the group that received training, people with typical recognition abilities identified AI faces with an accuracy of 51 percent – about level with random chance. The trained super-recognizers, though, reached an accuracy of 64 percent, correctly spotting the AI faces well over half the time.
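To make the comparison with chance concrete, here is a small Python sketch that uses only the four percentages quoted above. The names (`reported_accuracy`, `points_vs_chance`, `training_gap`) are made up for this illustration, and because the trained and untrained groups were different people, the "gap" is a difference between groups rather than a measured improvement in any individual.

```python
CHANCE = 50  # percent: half of the images were AI-generated

# Accuracy figures as quoted in the article (percent of AI faces correctly identified).
reported_accuracy = {
    ("super-recognizer", "untrained"): 41,
    ("typical", "untrained"): 31,
    ("super-recognizer", "trained"): 64,
    ("typical", "trained"): 51,
}


def points_vs_chance(accuracy_percent, chance=CHANCE):
    """Percentage points above (+) or below (-) random guessing."""
    return accuracy_percent - chance


def training_gap(group, table=reported_accuracy):
    """Trained-group accuracy minus untrained-group accuracy, in percentage points."""
    return table[(group, "trained")] - table[(group, "untrained")]


for (group, condition), acc in reported_accuracy.items():
    print(f"{group:17s} {condition:10s} {acc}% ({points_vs_chance(acc):+d} points vs chance)")

for group in ("super-recognizer", "typical"):
    print(f"{group}: trained group scored {training_gap(group)} points higher")
```

On these figures, both untrained groups sit below chance (9 and 19 points under), while the trained groups differ from their untrained counterparts by 23 points for super-recognizers and 20 points for typical recognizers.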

The participants were trained to spot some of the tell-tale signs that a face has been made by AI, including missing teeth and strange blurring around the edges of hair and skin.

"Our study shows that the use of super-recognizers – people with very high face recognition ability – combined with training may help in the detection of AI faces," says Noyes.

AI typically makes faces through what's known as a generative adversarial network (GAN). Two networks are trained against each other: a generator that produces faces, and a discriminator that tries to tell those faces apart from photos of real people. This feedback loop then drives the image generator toward very realistic-looking results.
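The feedback loop described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the image models behind the faces in the study: each "face" is a single number, the "real" data are drawn from a bell curve centered on 4, and the generator and the assessing network (usually called the discriminator) have just two parameters each. Every name here (`Generator`, `Discriminator`, `train`) is hypothetical.

```python
import math
import random


def sigmoid(t):
    # Clamp the input so math.exp cannot overflow.
    t = max(-60.0, min(60.0, t))
    return 1.0 / (1.0 + math.exp(-t))


class Discriminator:
    """Toy assessor: D(x) = sigmoid(w*x + c), its estimate that x is real."""

    def __init__(self, w=0.1, c=0.0):
        self.w, self.c = w, c

    def score(self, x):
        return sigmoid(self.w * x + self.c)

    def step(self, real_x, fake_x, lr):
        # One gradient-descent step on -log D(real) - log(1 - D(fake)).
        d_real, d_fake = self.score(real_x), self.score(fake_x)
        grad_w = -(1.0 - d_real) * real_x + d_fake * fake_x
        grad_c = -(1.0 - d_real) + d_fake
        self.w -= lr * grad_w
        self.c -= lr * grad_c


class Generator:
    """Toy generator: turns random noise z into a sample a*z + b."""

    def __init__(self, a=1.0, b=0.0):
        self.a, self.b = a, b

    def sample(self, z):
        return self.a * z + self.b

    def step(self, z, disc, lr):
        # One gradient-descent step on -log D(g(z)):
        # nudge the output toward values the assessor rates as more "real".
        x = self.sample(z)
        dloss_dx = -(1.0 - disc.score(x)) * disc.w
        self.a -= lr * dloss_dx * z
        self.b -= lr * dloss_dx


def train(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate assessor and generator updates (all settings are assumed)."""
    rng = random.Random(seed)
    gen, disc = Generator(), Discriminator()
    for _ in range(steps):
        real_x = rng.gauss(real_mean, real_std)
        fake_x = gen.sample(rng.gauss(0.0, 1.0))
        disc.step(real_x, fake_x, lr)            # assessor learns to separate real from fake
        gen.step(rng.gauss(0.0, 1.0), disc, lr)  # generator learns to fool the assessor
    return gen, disc


if __name__ == "__main__":
    gen, disc = train()
    print(f"generator samples are now centered near {gen.b:.2f}")
```

Real face generators work on images and have millions of parameters, but the push and pull is the same idea: each time the assessor gets better at spotting fakes, the generator is updated to produce output that slips past it.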

AI images can now be made quickly and easily, and are increasingly used in all kinds of media, from fake dating profiles to identity-theft scams. Training may help more individuals avoid being misled.

"Our training procedure is brief and easy to implement," says Katie Gray, a psychology researcher at the University of Reading.

"The results suggest that combining this training with the natural abilities of super-recognizers could help tackle real-world problems, such as verifying identities online."

The research has been published in Royal Society Open Science.