Scientists have developed a new device that makes it easier to measure our brain activity when we communicate, finding evidence of how our brains 'align' when we share information.

By spotting when and how different people's brain activity syncs up during communication, the researchers hope to better understand how information can be conveyed more effectively, and why some messages get lost in translation.

The analysis is made possible by a special wearable brain-imaging device, developed by researchers at Drexel University and Princeton University, which uses a system called functional near-infrared spectroscopy (fNIRS for short).

fNIRS uses near-infrared light to measure brain activity through changes in blood oxygen levels, and because the device can be worn easily, subjects can be monitored as they interact face-to-face – something that's not possible when people have to recline inside bulky fMRI scanners.

"Now that we know fNIRS is a feasible tool, we are moving into an exciting era when we can know so much more about how the brain works as people engage in everyday tasks," says one of the team, Banu Onaral from Drexel University.

In other words, this scanner could tell when you really are tuned in, and when you've zoned out.

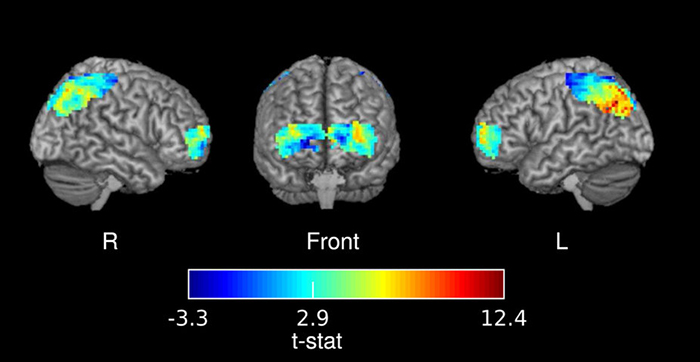

One of the scans identifying brain alignment. Credit: Drexel University

To check the potential of the fNIRS headband, the researchers had a native English speaker and two native Turkish speakers each wear one of the devices, and tell an unrehearsed, real-life story in their native language.

Those stories, plus another recorded at a live storytelling event, were played to 15 English-speaking listeners, who were also wearing fNIRS headbands.

The scientists focussed on the prefrontal and parietal areas of the brain – regions linked to reasoning and understanding, as well as discerning the beliefs and goals of other people.

As expected, the brain activity of the listeners only matched up with that of the storytellers when the English stories were used – the stories the listeners could actually understand.

Using the fNIRS scans from the headbands, the team noted matching patterns in oxygenated and deoxygenated haemoglobin concentrations in the brains of both the speakers and the listeners.

While we don't fully understand how these areas of the brain work, the fact that matching patterns were shown in the listeners after a short delay strongly suggests that some kind of message decoding is taking place.
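To make the idea of "matching patterns after a short delay" concrete, here is a minimal sketch of one common way to look for it: a lagged correlation between a speaker signal and a listener signal. This is an illustration only, not the analysis the researchers used. The function name `lagged_correlation`, the synthetic signals and the 5-sample delay are all assumptions made up for the example.

```python
import numpy as np

def lagged_correlation(speaker, listener, max_lag):
    """Pearson correlation between two signals for each listener delay
    from 0 to max_lag samples (hypothetical helper for illustration)."""
    speaker = np.asarray(speaker, dtype=float)
    listener = np.asarray(listener, dtype=float)
    results = {}
    for lag in range(max_lag + 1):
        # Pair speaker sample t with listener sample t + lag
        s = speaker[: len(speaker) - lag]
        l = listener[lag:]
        results[lag] = float(np.corrcoef(s, l)[0, 1])
    return results

# Synthetic data standing in for real recordings (assumption, not fNIRS data)
rng = np.random.default_rng(0)
speaker = rng.standard_normal(500)
true_delay = 5  # listener echoes the speaker 5 samples later
listener = np.roll(speaker, true_delay) + 0.5 * rng.standard_normal(500)

corr = lagged_correlation(speaker, listener, max_lag=10)
best_lag = max(corr, key=corr.get)
print(f"strongest match at a delay of {best_lag} samples")
```

In this toy setup the correlation peaks at the delay that was built into the listener signal, while the other delays stay close to zero. Real haemoglobin signals are slow and noisy, so an actual analysis would need filtering and statistical testing that this sketch leaves out.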

What's more, the study results matched up with previous work on speaker-listener relationships done with fMRI scans – where similar correlations were spotted – establishing a new and reliable way of measuring brain coupling during social interaction.

It's only a preliminary study, with more detailed analyses to come, but at least now we know that this method for monitoring synced brain activity actually works.

In the future, the researchers say similar systems could be used to measure how well doctors communicate with their patients, the impact of different teaching methods, or how people react to television news.

"Being able to look at how multiple brains interact is an emerging context in social neuroscience," says one of the researchers, Hasan Ayaz from Drexel University.

"Now [we] have a tool that can give us richer information about the brain during everyday tasks – such as natural communication – that we could not receive in artificial lab settings or from single brain studies."

The findings have been published in Scientific Reports.