Weather forecasting is notoriously wonky - climate modeling even more so. But their increasing ability to predict what the natural world will throw at us humans is largely thanks to two things - better models and increased computing power.

Now, a new paper from researchers led by Daniel Klocke of the Max Planck Institute in Germany presents what some in the climate modeling community have described as the "holy grail" of their field - an almost kilometer-scale resolution model that combines weather forecasting with climate modeling.

Technically the scale of the new model isn't quite 1 sq km per modeled patch - it's 1.25 kilometers.

But really, who's counting at that point - there are an estimated 336 million cells to cover all the land and sea on Earth, and the authors added the same number of "atmospheric" cells directly above the ground-based ones, making for a total of 672 million calculated cells.
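For readers who want to sanity-check those numbers, here is a minimal back-of-the-envelope sketch. It assumes Earth's surface area is roughly 510 million square kilometers (a textbook figure, not one from the paper) and treats every cell as an equal-area square, which is a simplification. The function names are made up for illustration.

```python
import math

# Assumption: approximate surface area of Earth in km^2 (not taken from the paper).
EARTH_SURFACE_KM2 = 510.1e6

# From the article: estimated number of surface cells.
SURFACE_CELLS = 336e6


def equivalent_cell_side_km(n_cells, area_km2=EARTH_SURFACE_KM2):
    """Side length of a square with the same area as one average cell."""
    return math.sqrt(area_km2 / n_cells)


def total_cells(n_surface_cells):
    """Surface cells plus the same number of atmospheric cells above them."""
    return 2 * n_surface_cells


if __name__ == "__main__":
    print(f"Equivalent cell side: {equivalent_cell_side_km(SURFACE_CELLS):.2f} km")
    print(f"Total cells: {total_cells(SURFACE_CELLS):,.0f}")
```

Under those assumptions the equivalent side length comes out a little over 1.2 km, which is consistent with the quoted 1.25 km scale.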

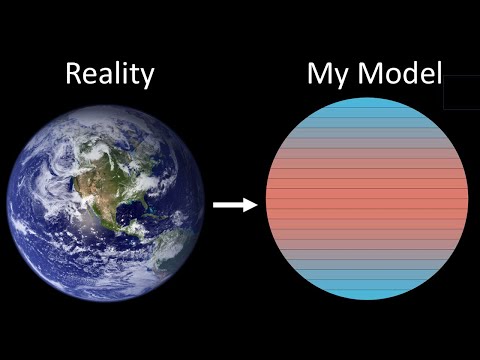

For each of those cells, the authors ran a series of interconnected models to reflect Earth's primary dynamic systems. They broke them into two categories - "fast" and "slow".

The "fast" systems include the energy and water cycles - which basically means the weather. In order to clearly track them, a model needs extremely high resolution, like the 1.25 km the new system is capable of.

For this model, the authors used the ICOsahedral Nonhydrostatic (ICON) model that was developed by the German Weather Service and the Max Planck Institute for Meteorology.

"Slow" processes, on the other hand, include the carbon cycle and changes in the biosphere and ocean geochemistry. These reflect trends over the course of years or even decades, rather than the few minutes it takes a thunderstorm to move from one 1.25 km cell to another.

Combining these fast and slow processes is the real breakthrough of the paper, as the authors themselves point out. Typical models that incorporate these complex systems are only computationally tractable at resolutions coarser than 40 km.

So how did they do it? By combining some really in-depth software engineering with plenty of the newest computer chips money can buy. It's time to nerd out on some computer software and hardware engineering, so if you're not into that, feel free to skip the next few paragraphs.

The model used as the basis for much of this work was originally written in Fortran - the bane of anyone who has ever tried to modernize code written before 1990.

Since it was originally developed, it had become bogged down with plenty of extras that made it difficult to use in any modern computational architecture. So the authors decided to use a framework called Data-Centric Parallel Programming (DaCe) that would handle the data in a way that is compatible with modern-day systems.

That modern system took the form of JUPITER and Alps, two supercomputers located in Germany and Switzerland respectively, both of which are based on the new GH200 Grace Hopper chip from Nvidia.

In these chips, a GPU (like the type used in training AI - in this case called Hopper) is accompanied by a CPU (in this case called Grace, which Nvidia designed around the Arm architecture).

This bifurcation of computational responsibilities and specialities allowed the authors to run the "fast" models on the GPU to reflect their relatively rapid update speeds, while the slower carbon cycle models were supported by the CPUs in parallel.
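To make that division of labor a little more concrete, here is a toy sketch of the general idea: a "fast" component that takes many small steps and a "slow" component that takes one step over the same window, running side by side and swapping results at the end of each window. It is not the authors' code, does not use ICON or DaCe, and uses plain Python threads as a stand-in for the GPU and CPU. Every function name and every number in it is invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def step_fast(fast, slow_seen, n_steps):
    """Toy 'fast' component: many short steps, relaxing toward the slow state."""
    for _ in range(n_steps):
        fast = fast + 0.1 * (slow_seen - fast)
    return fast


def step_slow(slow, fast_seen):
    """Toy 'slow' component: one small drift per window, nudged by the fast state."""
    return slow + 0.01 * fast_seen


def run_coupled(n_windows, fast_steps_per_window=10):
    """Advance both toy components side by side, exchanging state once per window."""
    fast, slow = 0.0, 1.0
    with ThreadPoolExecutor(max_workers=2) as pool:
        for _ in range(n_windows):
            # Each component only sees the other's state from the previous window.
            fast_future = pool.submit(step_fast, fast, slow, fast_steps_per_window)
            slow_future = pool.submit(step_slow, slow, fast)
            fast, slow = fast_future.result(), slow_future.result()
    return fast, slow


if __name__ == "__main__":
    print(run_coupled(n_windows=5))
```

The point of the sketch is only the shape of the loop: neither component waits on the other inside a window, and they synchronize when the window closes.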

Separating out the computational power required like that allowed them to utilize 20,480 GH200 superchips to accurately model 145.7 days in a single day. To do so, the model used nearly 1 trillion "degrees of freedom", which, in this context, means the total number of values it had to calculate. No wonder this model needed a supercomputer to run.
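Those figures are easier to appreciate with a little arithmetic. The sketch below uses only the numbers quoted above, rounds "nearly 1 trillion" up to exactly 1 trillion (so the per-cell figure is an upper bound), and assumes a 365.25-day year. The function names are made up for illustration.

```python
# From the article: simulated days completed per wall-clock day.
SIM_DAYS_PER_DAY = 145.7

# From the article: total cells, and "nearly 1 trillion" degrees of freedom
# (rounded up here, so the per-cell result is an upper bound).
TOTAL_CELLS = 672e6
DEGREES_OF_FREEDOM = 1e12

# Assumption: average length of a year in days.
DAYS_PER_YEAR = 365.25


def wall_clock_days(simulated_years, throughput=SIM_DAYS_PER_DAY):
    """Wall-clock days needed to simulate the given number of years."""
    return simulated_years * DAYS_PER_YEAR / throughput


def values_per_cell(dof=DEGREES_OF_FREEDOM, cells=TOTAL_CELLS):
    """Average number of calculated values per cell."""
    return dof / cells


if __name__ == "__main__":
    print(f"One simulated year: {wall_clock_days(1):.1f} wall-clock days")
    print(f"Thirty simulated years: {wall_clock_days(30):.0f} wall-clock days")
    print(f"Values per cell (upper bound): {values_per_cell():.0f}")
```

In other words, at this throughput a single simulated year takes about two and a half days of supercomputer time, and each cell carries on the order of a thousand or more values.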

Unfortunately, that also means that models of this complexity aren't coming to your local weather station anytime soon.

Computational power like that isn't easy to come by, and the big tech companies are more likely to use it on squeezing every last bit out of generative AI that they can, no matter what the consequences for climate modeling.

But, at the very least, the fact that the authors were able to pull off this impressive computational feat deserves some praise and recognition - and hopefully one day we'll get to a point where those kinds of simulations become commonplace.

The research is available as a preprint on arXiv.

This article was originally published by Universe Today. Read the original article.