While we're not yet close to replicating the complexity and intricacy of the human brain with anything artificial, scientists are making progress with certain dedicated devices – like a newly developed programmable resistor.

Resistors like this one can serve as the building blocks of analog neural networks, an AI architecture modeled on the structure of the human brain.

This latest device can process information around a million times faster than the synapses that link neurons together in the brain.

In particular, the artificial synapse is intended for analog deep learning, an approach to AI that promises faster computation with lower energy use. That matters both for affordability and for the demands placed on the planet's natural resources.

The key to this resistor's performance is a carefully chosen, efficient inorganic material. The team behind the project says the resulting gains in neural network training speed could be substantial.

"Once you have an analog processor, you will no longer be training networks everyone else is working on," says computer scientist Murat Onen from the Massachusetts Institute of Technology (MIT).

"You will be training networks with unprecedented complexities that no one else can afford to, and therefore vastly outperform them all. In other words, this is not a faster car, this is a spacecraft."

The inorganic material in question is phosphosilicate glass (PSG), which is silicon dioxide with added phosphorus. Used as the solid electrolyte in the resistor, PSG has nanoscale pores that let protons move through it at unprecedented speeds when 10-volt pulses are applied to the device.

Even better, PSG can be made with the same fabrication techniques used for silicon circuitry. That should make it easier to integrate into existing production processes without a large increase in cost.

In the brain, synapses are strengthened or weakened to control the flow of signals and other information. Here, controlling the movement of protons changes the device's electrical conductance, which serves the same purpose. The process is fast and reliable, and it works at room temperature, which makes it more practical too.

"The speed certainly was surprising," says Onen.

"Normally, we would not apply such extreme fields across devices, in order to not turn them into ash. But instead, protons ended up shuttling at immense speeds across the device stack, specifically a million times faster compared to what we had before.

"And this movement doesn't damage anything, thanks to the small size and low mass of protons. It is almost like teleporting."

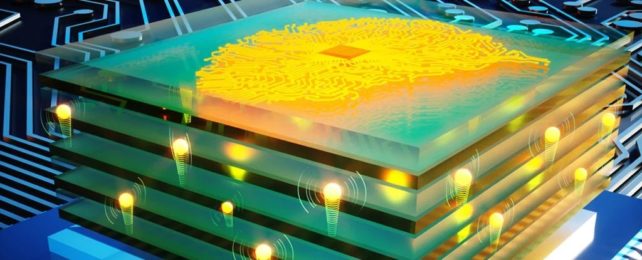

The big promise here is much faster AI training that uses less energy. To build a working neural network, the resistors would be arranged in chessboard-style arrays that can be operated in parallel for greater speed.
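Arrays like this are generally known as crossbars, and the reason they run in parallel comes down to two basic circuit laws. Below is a minimal, idealized Python sketch, not the MIT team's design. Each resistor's conductance plays the role of a network weight. Applying a voltage to every row lets Ohm's law multiply each voltage by each conductance, while Kirchhoff's current law adds up the currents on every column at once. The function names, the conductance range, the step size and the linear "one pulse, one step" programming rule are all hypothetical illustrations. Wire resistance, noise and the nonlinear response of real devices are ignored.

```python
from typing import List

Matrix = List[List[float]]


def crossbar_currents(conductances: Matrix, voltages: List[float]) -> List[float]:
    """Idealized crossbar read-out (hypothetical helper).

    conductances[i][j] is the conductance (siemens) of the resistor joining
    row i to column j, i.e. the "weight"; voltages[i] is the input voltage
    on row i. Ohm's law gives each resistor a current G * V, and Kirchhoff's
    current law sums those currents along each column, so column j carries
    sum_i G[i][j] * V[i]. Hardware produces every column at once; this
    sketch simply loops. Wire resistance and noise are ignored.
    """
    n_rows = len(conductances)
    if n_rows != len(voltages):
        raise ValueError("need exactly one voltage per row")
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


def program_conductance(g: float, pulses: int, step: float = 1e-6,
                        g_min: float = 0.0, g_max: float = 1e-4) -> float:
    """Hypothetical toy programming rule: each positive pulse strengthens the
    "synapse" by `step` siemens and each negative pulse weakens it, clipped
    to an assumed device range [g_min, g_max]. Real devices respond
    nonlinearly; these numbers are illustrative only."""
    return min(g_max, max(g_min, g + pulses * step))


if __name__ == "__main__":
    G = [[1e-5, 2e-5],
         [3e-5, 4e-5]]  # 2x2 array of conductances (siemens)
    V = [0.1, 0.2]      # row input voltages (volts)
    print(crossbar_currents(G, V))  # column output currents (amperes)
    G[0][0] = program_conductance(G[0][0], pulses=3)  # strengthen one "synapse"
    print(crossbar_currents(G, V))
```

The point of the design is that one read step yields a whole matrix-vector product, the core operation in neural network training, with no need to shuttle each weight to a separate processor.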

As a next step, the researchers will need to adapt what they've learned from developing this resistor so that it can be produced on a larger scale. That won't be easy, but the team is confident it can be done.

The benefits would show up in AI systems that handle tasks such as identifying what's in images or processing natural voice commands.

In principle, any application where artificial intelligence learns by analyzing huge amounts of data could benefit, including self-driving cars and medical image analysis.

Further research should allow these resistors to be built into real systems, and help overcome the performance bottlenecks that currently limit the voltage that can be applied.

"The path forward is still going to be very challenging, but at the same time, it is very exciting," says computer scientist and study author Jesús del Alamo, from MIT.

The research has been published in Science.