Behold the FlatScope, an innovative implant scientists hope could one day beam sensory information straight to your brain – skipping the need to repair eyes or ears to restore vision or hearing.

Not only that, the team behind the FlatScope says it has the potential to capture brain activity in much more detail than is currently possible, perhaps monitoring and stimulating several million neurons in the cortex (our grey matter) by the time it's finished.

One of the main long-term objectives for the researchers at Rice University is to scan the brain at a deep enough level to figure out the fundamentals of sensory input – and that's key to eventually learning how to control those sensory inputs.

"The inspiration comes from advances in semiconductor manufacturing," says one of the team, Jacob Robinson. "We're able to create extremely dense processors with billions of elements on a chip for the phone in your pocket. So why not apply these advances to neural interfaces?"

Researchers are developing the FlatScope in partnership with US defence research agency DARPA as part of its Neural Engineering System Design (NESD) program, which aims to produce a fully functioning, implantable system that enables communication between the brain and the digital world.

"Such an interface would convert the electrochemical signaling used by neurons in the brain into the ones and zeros that constitute the language of information technology, and do so at far greater scale than is currently possible," says DARPA.

Eventually, it could allow partially sighted or blind people to see the world through a camera attached to their shirt, though that's still a long way off.

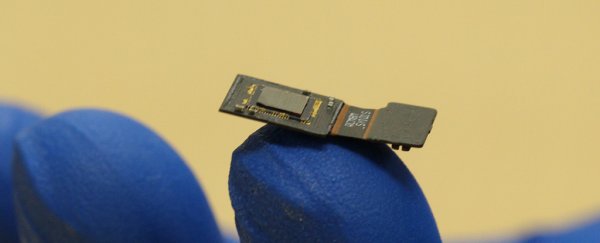

Right now all the team has is a prototype that sits on top of the brain and detects optical signals from neurons in the cortex.

Rather than the 16 electrodes that the best brain monitoring systems have today, FlatScope could deal in thousands when it's ready to go, the researchers say.

They're working with bioluminescence experts to figure out ways of getting neurons to release photons when triggered, creating a light show that reveals more about the inner workings of the brain.

The team is also building on work they've done with the FlatCam: a super-thin, low-powered camera sensor that could be used in an implant.

Yet another challenge is putting together algorithms that can crunch through the data coming from the brain and make sense of the 3D maps of light and neuron activity being fed back.

And after all those minor hurdles are overcome, scientists will also need to work out how the whole device will be powered, and how it will transmit and receive data wirelessly.

That's certainly plenty to be getting on with – the team has received funding for the next four years – but if this interface can be perfected, damaged human senses could be replaced by digital equivalents. Either that, or we could generate the most immersive virtual reality world ever.

We're looking forward to seeing how the project develops over the next few years, and you can stay up to date through the Rice University Electrical and Computer Engineering Department.