When a child first does something without being asked, their parents usually celebrate. Researchers at the University of Southern California (USC) are having that moment right now.

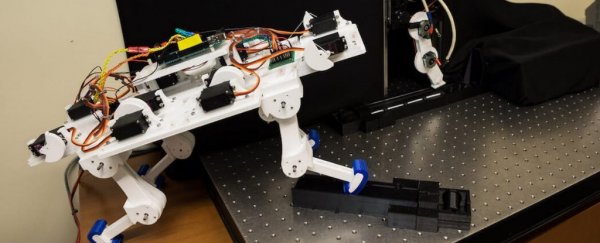

The team claims to have created the first AI-controlled robotic limb that can learn how to walk without being explicitly programmed to do so.

The algorithm they used is inspired by real-life biology. Just like animals that can walk soon after birth, this robot can figure out how to use its animal-like tendons after only five minutes of unstructured play.

"The ability for a species to learn and adapt their movements as their bodies and environments change has been a powerful driver of evolution from the start," explains co-author Brian Cohn, a computer scientist at USC.

"Our work constitutes a step towards empowering robots to learn and adapt from each experience, just as animals do."

Today, most robots take months or years before they are ready to interact with the rest of the world. But with this new algorithm, the team has figured out how to make robots that can learn by simply doing. This is known in robotics as "motor babbling" because it closely mimics how babies learn to speak through trial and error.

"During the babbling phase, the system will send random commands to motors and sense the joint angles," co-author Ali Marjaninejad, an engineer at USC, told PC Mag.

"Then, it will train the three-layer neural network to guess what commands will produce a given movement. We then start performing the task and reinforce good behavior."
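To make the babbling idea concrete, here is a minimal sketch in Python. It is not the team's code. The limb model (`toy_limb` and its `TENDON_TO_JOINT` matrix), the network size, and the training settings are all invented for illustration, and "three-layer" is read here as an input, a hidden and an output layer. The final step Marjaninejad describes, reinforcing good behavior while performing the task, is left out.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so runs are repeatable

# Hypothetical plant, invented for illustration: each row says how one
# tendon command pulls on the two joints. Not the USC limb's real mechanics.
TENDON_TO_JOINT = np.array([[1.0, 0.0],
                            [-0.6, 0.8],
                            [0.0, -1.0]])


def toy_limb(commands):
    """Map a batch of tendon commands (N x 3) to joint angles (N x 2)."""
    return np.tanh(commands @ TENDON_TO_JOINT)


def babble(n_samples):
    """Babbling phase: send random commands, record the sensed joint angles."""
    commands = rng.uniform(0.0, 1.0, size=(n_samples, 3))
    return toy_limb(commands), commands


def train_inverse_map(angles, commands, hidden=16, epochs=2000, lr=0.05):
    """Fit a small network (input, hidden, output) from angles to commands."""
    w1 = rng.normal(0.0, 0.5, size=(2, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 0.5, size=(hidden, 3))
    b2 = np.zeros(3)
    losses = []
    for _ in range(epochs):
        h = np.tanh(angles @ w1 + b1)          # hidden layer
        err = (h @ w2 + b2) - commands         # prediction error
        losses.append(float(np.mean(err ** 2)))
        # Backpropagate the mean-squared error and take a gradient step.
        g_out = 2.0 * err / err.size
        g_hid = (g_out @ w2.T) * (1.0 - h ** 2)
        w2 -= lr * (h.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        w1 -= lr * (angles.T @ g_hid)
        b1 -= lr * g_hid.sum(axis=0)

    def predict(target_angles):
        h = np.tanh(target_angles @ w1 + b1)
        return np.clip(h @ w2 + b2, 0.0, 1.0)  # keep commands in range

    return predict, losses


# Babble, learn the inverse map, then ask for poses from a fresh babbling run.
angles, commands = babble(500)
predict, losses = train_inverse_map(angles, commands)
targets, _ = babble(100)
achieved = toy_limb(predict(targets))
print("training loss: %.4f -> %.4f" % (losses[0], losses[-1]))
print("mean joint-angle error:", np.mean(np.abs(achieved - targets)))
```

Because three tendons drive only two joints, many different commands reach the same pose. The network in this sketch simply settles on one answer for each pose, which is one plausible way to picture how limited early experience could turn into a habit.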

(Matthew Lin)

All of this means that when roboticists are writing code, they no longer need exact equations, sophisticated computer simulations, or thousands of repetitions to refine a task.

Instead, with this new technology, a robot can build its own internal map of its limb and its environment, refining how its three-tendon, two-joint limb moves and interacts with its surroundings as it grows and learns.

The researchers noticed that some robots even developed personal gaits, depending on what they experienced in their first moments of life.

"You can recognize someone coming down the hall because they have a particular footfall, right?" explains co-author Francisco Valero-Cuevas, a biomedical engineer at USC.

"Our robot uses its limited experience to find a solution to a problem that then becomes its personalized habit, or 'personality.' We get the dainty walker, the lazy walker, the champ… you name it."

It's a feat that biologists and roboticists have long dreamed of, and the authors claim it could give future robots the "enviable versatility, adaptability, resilience and speed of vertebrates during everyday tasks."

The possibilities for the technology are only constrained by our imaginations.

With this powerful new algorithm, we might be able to provide more responsive prosthetics for people with disabilities, or we could send robots to safely explore space or perform search-and-rescue attempts in dangerous or unknown terrain.

"I envision muscle-driven robots, capable of mastering what an animal takes months to learn, in just a few minutes," says Dario Urbina-Melendez, another member of the team and a biomedical engineer at USC.

"Our work combining engineering, AI, anatomy and neuroscience is a strong indication that this is possible."

This study has been published in Nature Machine Intelligence.