EMBARGO Wednesday 19 July 1600 BST | 1500 GMT | Thursday 20 July 0100 AEST

Back when the Universe was still just a wee baby Universe, there wasn't a lot going on chemically. There was hydrogen, with some helium, and a few traces of other things. Heavier elements didn't arrive until stars had formed, lived, and died.

Imagine, therefore, the consternation of scientists when, using the James Webb Space Telescope to peer back into the distant reaches of the Universe, they discovered significant amounts of carbon dust, less than a billion years after the Big Bang.

The discovery suggests that there was some means of enhanced carbon production in the tumultuous early Universe – probably massive stars, spewing it out into space as they died.

"Our detection of carbonaceous dust at redshift 4-7 provides crucial constraints on the dust production models and scenarios in the early Universe," write a team led by cosmologist Joris Witstok of the University of Cambridge in the UK.

The first billion years of the Universe's life, known as the Cosmic Dawn, following the Big Bang 13.8 billion years ago, was a critical time. The first atoms formed; the first stars; the first light bloomed in the darkness. But it took stars themselves to forge significant quantities of elements heavier than hydrogen and helium.

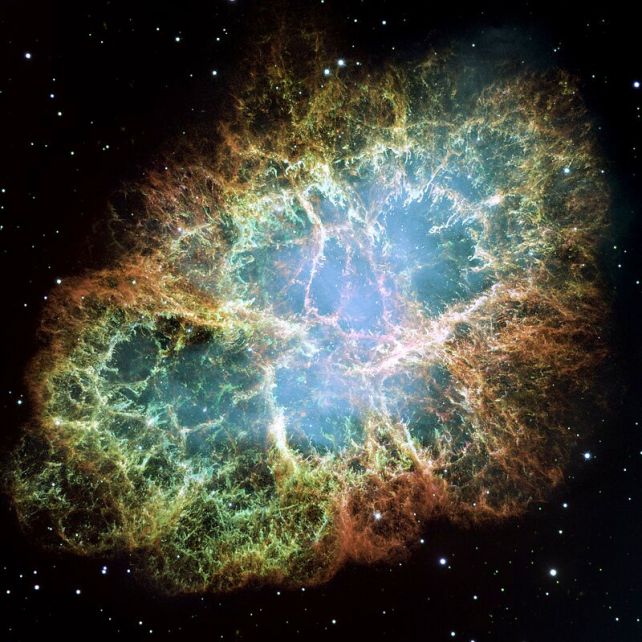

In the hot, dense nuclear furnaces of their cores, stars smash atoms together, fusing them into heavier elements in a process called stellar nucleosynthesis. But these heavier elements largely just accumulate in the star until it runs out of fusion material and dies, spewing its contents out into the space around it. It's a process that usually takes some time.

Witstok and his colleagues used the JWST to study dust hanging about during the Cosmic Dawn, and spotted something strange. They found an unexpectedly strong feature in the spectrum associated with the absorption of light by carbon-rich dust, in galaxies as early as just 800 million years after the Big Bang.

The problem is that these dust grains are thought to take some hundreds of millions of years to form, and the characteristics of the galaxies suggest they are too young for this formation timescale. But it's not an impossible problem to resolve.

The first stars in the Universe are thought to have been a lot more massive than the younger stars we see around us today. Since more massive stars burn through their fuel reserves more quickly, they would have lived relatively short lives, exploding in supernovae that could have spread heavier material relatively early.

There are also stars around today that are absolute dust factories. They are called Wolf-Rayet stars: massive stars that have reached the end of their lives, on the brink of supernova. They don't have much hydrogen left, but they have a lot of nitrogen or carbon, and they are in the process of ejecting mass at a very high rate. That ejecta is also high in carbon.

The discovery of high amounts of carbon in multiple galaxies during the Cosmic Dawn could be evidence that these processes were not just occurring, but were more common during the early Universe than they are in more recent times.

In turn, this suggests that huge stars were the norm for the first generation, helping to explain why we don't see any of them still hanging around the Universe today.

The research has been published in Nature.